This is a non-technical survey of approaches to how “deidentification” happens in healthcare, pros and cons of a variety of approaches, and an overview of where privacy-preserving technology is headed. Thanks to privacy technology thought leaders Abel Kho, Andrei Lapets, Dan Barth-Jones, Patrick Baier, and Ian Coe (as well as internal experts Patsy Bailin, Aneesh Kulkarni, and Victor Cai) for ideas and feedback.

In my earlier post, The Fragmentation of Health Data, I gave an overview of where data comes from and how it flows across the healthcare ecosystem. This post will focus on approaches to protecting privacy during those data flows.

In the US, millions of healthcare events take place every day, ranging from patients undergoing lab tests, to patients picking up prescriptions at a pharmacy, to individuals passing away. There are many analytical and public health uses of this data, such as:

- population-level statistics (“How many measles cases are there among 5–10 year olds in California?”)

- discovering the effectiveness of treatments (“What is the 10-year survival rate of patients who take a particular drug?”)

- discovering adverse events (“Are cancer rates high among patients who receive a particular medical device?”)

- discovering new targeted therapies

Traditionally (and especially since HIPAA was passed in 1996), these analyses have mostly occurred on top of datasets that have been “deidentified.” Researchers are seeking an answer in aggregate, so they don’t need to know who a patient is — but they need to know the underlying data about each patient to perform an analysis.

As more and more data becomes available, the pace of research relying on linked, deidentified health data has increased; but, at the same time, it has become harder and harder to truly anonymize information. This is causing a public debate around a tradeoff between liquidity of health data for analytical purposes and protection of data for a patient.

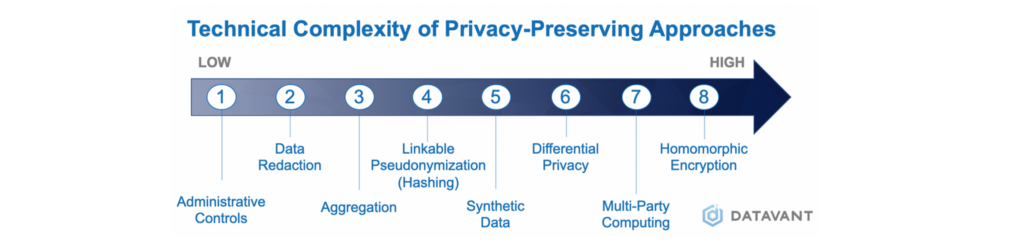

Approaches to Privacy-Preserving Data Sharing

There is renewed attention on areas of research focused on approaches to minimize this tradeoff, developing methods to answer analytical questions without loss of patient privacy. Below is an overview of different approaches that are emerging, the pros and cons of each, and where technology is headed.

Approach 1: Process and Administrative Controls.

Summary: One fundamental form of ensuring that data stays deidentified is to ensure that anyone who has access to the data credibly agrees to not attempt to reidentify it. This can include internal firewalls (agreements that a party won’t combine data with other parts of the organization), audits, controlled access (for example, access to datasets by only certain individuals or access only in a “clean room”), and contracts.

Pros: In most pragmatic use cases, process controls can ensure that data is only used for legitimate research purposes, and that the privacy risks of data exchange are theoretical vs. practical. Combined with other approaches (such as basic redaction), this can significantly reduce the likelihood of actual re-identification.

Cons: This approach leaves open the risk of bad actors or process failure that jeopardizes patient privacy. On the flip side, overly draconian process controls can reduce research and reduce data liquidity.

Approach 2: Data Redaction.

Summary: This is the most common form of de-identification. Institutions start by redacting explicitly identifying information (e.g., my name and social security number), then create broader categories (e.g., birth year instead of birthdate), and then remove low-frequency data from a dataset (e.g., removing patients with extremely rare diseases).

“K-anonymization” is the extreme form of redaction. It guarantees privacy by requiring that data continue to be deleted until every remaining record looks identical to at least K-1 other records (so, for 100-anonymization, a record can only be preserved if there are at least 99 other records identical to it, and redaction continues until that is the case).
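To make the rule above concrete, here is a minimal sketch in Python. It assumes that “looks identical” is judged only on a chosen set of indirect identifiers, and it uses suppression alone (real tools usually try coarsening values first). The field names `birth_year` and `zip3` and the function `k_anonymize` are hypothetical, invented for illustration.

```python
from collections import Counter

def k_anonymize(records, quasi_identifiers, k):
    """Drop every record whose combination of quasi-identifier values
    is shared by fewer than k records in the data set."""
    def key(record):
        return tuple(record[q] for q in quasi_identifiers)

    counts = Counter(key(r) for r in records)
    return [r for r in records if counts[key(r)] >= k]

# Hypothetical toy data: three patients share (1980, "941"); one stands alone.
records = [
    {"birth_year": 1980, "zip3": "941", "diagnosis": "flu"},
    {"birth_year": 1980, "zip3": "941", "diagnosis": "asthma"},
    {"birth_year": 1980, "zip3": "941", "diagnosis": "flu"},
    {"birth_year": 1975, "zip3": "100", "diagnosis": "rare disease"},
]

released = k_anonymize(records, ["birth_year", "zip3"], k=3)
print(len(released))  # 3: the lone 1975 record is suppressed
```

Note that the suppressed record is exactly the rare-disease patient, which illustrates how strict k-anonymization can delete the data a researcher cares about most.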

Pros: Basic redaction is simple and has extremely high impact at reducing pragmatic privacy risk; for example, removing name and social security number is an obvious first step on any data set.

Cons: Data redaction can remove valuable information that is useful for analysis. This is especially true for machine learning approaches (where having lots of data is valuable), and this is also very true if data is strictly k-anonymized. For example, a date of service and a diagnosis code can be valuable for computation, but these two data attributes may need to be redacted as “indirect identifiers” depending on the strictness of the deidentification.

Approach 3: Aggregation.

Summary: A simple form of de-identification is to store an aggregate answer, and then delete all underlying records.

Pros: This is a form of “true anonymization” — where all underlying data is removed.

Cons: This is only feasible for fairly simplistic research — it requires knowing a question upfront, and for the answer to that question to be in a single data set. It also removes data needed for substantiating research studies.

Approach 4: Hashing & Linkable Redaction (or, “Pseudonymization”).

Summary: A cryptographic approach known as hashing can be used to indicate whenever two data attributes are the same, without knowing what the underlying attribute means. For example, “Travis May” could match to a consistent hash value “abc123”, which means that whenever that value shows up in multiple data sets, a researcher can know that it’s the same patient, without being able to reverse engineer that “abc123” corresponds with “Travis May.”

Pros: This redacts identifying information while still allowing data to be linked across data sets, an important component of any major research study. This method is also specifically approved under HIPAA as a means of de-identified linking (though linked data sets still need to be reviewed for whether the combination of data is itself too identifying).

Cons: Careful key management and process controls must be created to ensure no party can build a “lookup table” or otherwise reverse engineer hashes. Additionally, hashing is only useful for true identity aspects (such as name), but is not useful for de-identifying the attributes about a patient.
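The hashing idea and the key-management concern above can be sketched with a keyed hash (HMAC-SHA256) from Python's standard library. This is an illustrative assumption, not a description of any particular vendor's method: the function `pseudonymize`, the normalization step, and the example key are all hypothetical.

```python
import hashlib
import hmac

def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Map an identifier to a consistent token using a keyed hash.
    Without the key, a party cannot rebuild the mapping by hashing
    a list of candidate names (the "lookup table" attack)."""
    # Assumed normalization: ignore case and extra whitespace.
    normalized = " ".join(identifier.lower().split())
    return hmac.new(secret_key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()

SECRET_KEY = b"example-key-do-not-use"  # hypothetical; real keys need careful management

token_a = pseudonymize("Travis May", SECRET_KEY)
token_b = pseudonymize("  travis  MAY ", SECRET_KEY)
token_c = pseudonymize("Travis May", b"a-different-key")

print(token_a == token_b)  # True: same person, same token, so records can be linked
print(token_a == token_c)  # False: a different key gives an unrelated token
```

The second comparison is the reason key management matters: anyone holding the key can recompute tokens for known names, so the key has to be protected as carefully as the identities themselves.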

Approach 5: Synthetic Data.

Summary: Synthetic data is data that is generated to “look like” real data, but does not contain any actual data about individuals. It is most frequently used as a way to test algorithms and programs.

Pros: No actual data about individuals is in the data set used for analysis, so there is no privacy risk. Meanwhile, most statistics about the data can stay intact (averages, correlations of different variables, etc.)

Cons: While you can synthetically create data that maintains basic summary statistics, nuance in the data can be lost that is relevant to machine learning approaches, targeted quality improvement projects (e.g., identifying at-risk populations for whom you want to improve a particular process), and effective clinical trial recruitment. Also, synthetic data approaches don’t allow you to combine data across datasets about the same individual (which is often necessary for longitudinal analysis). Finally, these approaches generally require knowing ahead of time what statistical query you want to ask.

Approach 6: Differential Privacy.

Summary: Differential privacy is an approach in which carefully calibrated “random noise” is added so that it becomes mathematically very hard to tell whether any one individual is in a dataset. For example, within an underlying record, a date might be shifted by a few days, a height might be changed by a few inches, etc.
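As a rough illustration, here is a minimal sketch of one textbook technique, the Laplace mechanism, applied to a counting question. Note the swap: rather than perturbing individual records as in the example above, it adds noise to the aggregate answer. The names `private_count` and `laplace_noise`, the toy patient list, and the choice of `epsilon` are hypothetical, and a production system would need a more careful noise source than Python's `random` module.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two
    independent exponential draws with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Answer "how many records match?" with noise added.
    Adding or removing one person changes a count by at most 1,
    so the noise scale is 1 / epsilon."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)

# Hypothetical toy data.
patients = [{"age": 34}, {"age": 71}, {"age": 68}, {"age": 50}]

def is_senior(patient):
    return patient["age"] >= 65

# Smaller epsilon means more noise and stronger privacy.
noisy = private_count(patients, is_senior, epsilon=0.5, rng=random.Random())
print(noisy)  # close to 2, but different on every run
```

On a four-person data set the noise swamps the answer; the approach becomes useful when counts are large relative to the noise.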

Pros: Adding random noise can often be less destructive of information than redaction of data to get a similar level of anonymization.

Cons: Differential privacy is computationally complex to do at scale in a way that achieves the desired privacy guarantees while keeping the analysis useful. Some data sets (unstructured data, for example) do not work at all in a differential privacy context. [This is a cutting-edge approach, which means the cons may be significantly reduced in coming years.]

Approach 7: Multi-Party Computing.

Summary: Multi-party computing is an approach in which an analysis can be done on several data sets that different organizations have, without the organizations needing to share the data sets with each other. You might think of this as bringing the analysis to the data rather than bringing the data together for analysis. For example, if a pharmacy knows which drugs were filled, and a hospital knows what drugs were prescribed, multi-party computing can be used to determine what percentage of patients fill their prescriptions, without either party sending patient data to the other. This uses a variety of cryptographic approaches to ensure that no party learns anything beyond the aggregated answer.
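The pharmacy and hospital example requires matching patients across parties, which is more involved than fits here. A simpler illustration of the same idea is a secure sum using additive secret sharing: several organizations learn the total of their private counts without any of them revealing its own count. The sketch below simulates all parties in one process; the names `share` and `secure_sum`, the modulus, and the counts are hypothetical.

```python
import secrets

MODULUS = 2**61 - 1  # assumed to be larger than any possible total

def share(value: int, n_parties: int) -> list:
    """Split a value into n random-looking shares that sum to it (mod MODULUS).
    Any subset of fewer than n shares reveals nothing about the value."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_values: list) -> int:
    n = len(private_values)
    # Step 1: party i splits its value and sends share j to party j.
    all_shares = [share(v, n) for v in private_values]
    # Step 2: party j adds the shares it received and publishes only that subtotal.
    subtotals = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Step 3: anyone can add the published subtotals to get the true total.
    return sum(subtotals) % MODULUS

# Hypothetical patient counts held by three separate organizations.
counts = [120, 45, 310]
total = secure_sum(counts)
print(total)  # 475
```

Each published subtotal is a sum of random-looking shares, so on its own it says nothing about any single organization's count; only the final total is meaningful.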

Pros: Can drive valuable analysis without privacy loss. In theory, any analysis can be done in this form.

Cons: Multi-party computing is computationally complex and still emerging as a field, meaning computers struggle to run these algorithms quickly and they are difficult to build. It protects inputs and intermediate values in a computation, but outputs may still reveal identifying information (and thus the approach can be augmented with differential privacy or another technique). [This is a cutting-edge approach, which means the cons may be significantly reduced in coming years.]

Approach 8: Homomorphic Encryption.

Summary: Homomorphic encryption is an approach in which mathematical operations can be done on top of encrypted data. This means that an algorithm can be performed against data, without actually knowing what the underlying data means. For example, if a hospital knows date of prescription, and a pharmacy knows date the prescription is filled, homomorphic encryption would allow a company to find the time elapsed in between those two dates without knowing the underlying dates.
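The date example above can be sketched with a toy version of the Paillier cryptosystem, a scheme that supports addition and subtraction on encrypted numbers. This is only an illustration under loud assumptions: the key is built from two small, publicly known primes and is not secure, the dates are invented, and real deployments would rely on a vetted library. It needs Python 3.8 or later for modular inverses via `pow`.

```python
import math
import secrets
from datetime import date

# Toy key from two known Mersenne primes; far too small to be secure.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q                 # public key
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # private key
MU = pow(LAM, -1, N)                               # private key

def encrypt(m: int) -> int:
    """Encrypt with the public key; fresh randomness r hides repeated values."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (1 + m * N) % N2 * pow(r, N, N2) % N2

def decrypt(c: int) -> int:
    """Decrypt with the private key."""
    return (pow(c, LAM, N2) - 1) // N * MU % N

def subtract(c1: int, c2: int) -> int:
    """Return an encryption of (m1 - m2) using only ciphertexts, no key."""
    return c1 * pow(c2, -1, N2) % N2

# Hypothetical dates, encoded as day numbers before encryption.
enc_prescribed = encrypt(date(2020, 1, 10).toordinal())  # from the hospital
enc_filled = encrypt(date(2020, 1, 17).toordinal())      # from the pharmacy

# A third party computes on ciphertexts without seeing either date.
enc_elapsed = subtract(enc_filled, enc_prescribed)

elapsed_days = decrypt(enc_elapsed)  # only the key holder can read the answer
print(elapsed_days)  # 7
```

Paillier handles only addition-style operations; schemes that support arbitrary computation on encrypted data are considerably heavier.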

Pros: Can drive valuable analysis without privacy loss. In theory, any analysis can be done in this form.

Cons: Similar to multi-party computing, homomorphic encryption is computationally complex and still emerging as a field, meaning computers struggle to run these algorithms quickly and they are difficult to build (to give a sense, homomorphic encryption is estimated to be >1M times slower than running computations on raw data). It protects inputs and intermediate values in a computation, but outputs may still reveal identifying information (and thus the approach can be augmented with differential privacy or another technique). [This is a cutting-edge approach, which means the cons may be significantly reduced in coming years.]

***

Of course, in addition to privacy-preserving technologies, patient consent can and should often be used to engage the patient in the decision to use data that is initially about them. While getting consent can be administratively challenging, researchers often find that patients are remarkably generous with their data if it can help other patients like them.

In practice, every organization should combine data minimization (i.e., redacting or walling off data that isn’t necessary for analysis, with social security numbers as the extreme example), smart administrative controls, reasonable patient consent (depending on the use case), and some combination of the other methodologies. While there are different methods and approaches to privacy-preserving data sharing, at the end of the day, the most important guiding principle is to do well by the patients who entrust institutions with their data.

As the amount of data continues to explode, as the societal value of data-driven analysis continues to grow, and as individuals become more concerned with privacy, expect lots of continued research in approaches to privacy-preserving data methods.

An Overview of Approaches to Privacy-Preserving Data Sharing was originally published in Datavant on Medium.